Keyword [ChestX-ray14]

Yao L, Poblenz E, Dagunts D, et al. Learning to diagnose from scratch by exploiting dependencies among labels[J]. arXiv preprint arXiv:1710.10501, 2017.

1. Overview

1.1. Motivation

- pre-trained ImageNet models may introduce unintended biases, which are undesirable in a clinical setting

- there exist dependencies among labels

- the need for pre-training may be safely removed when sufficient medical data are available

In this paper

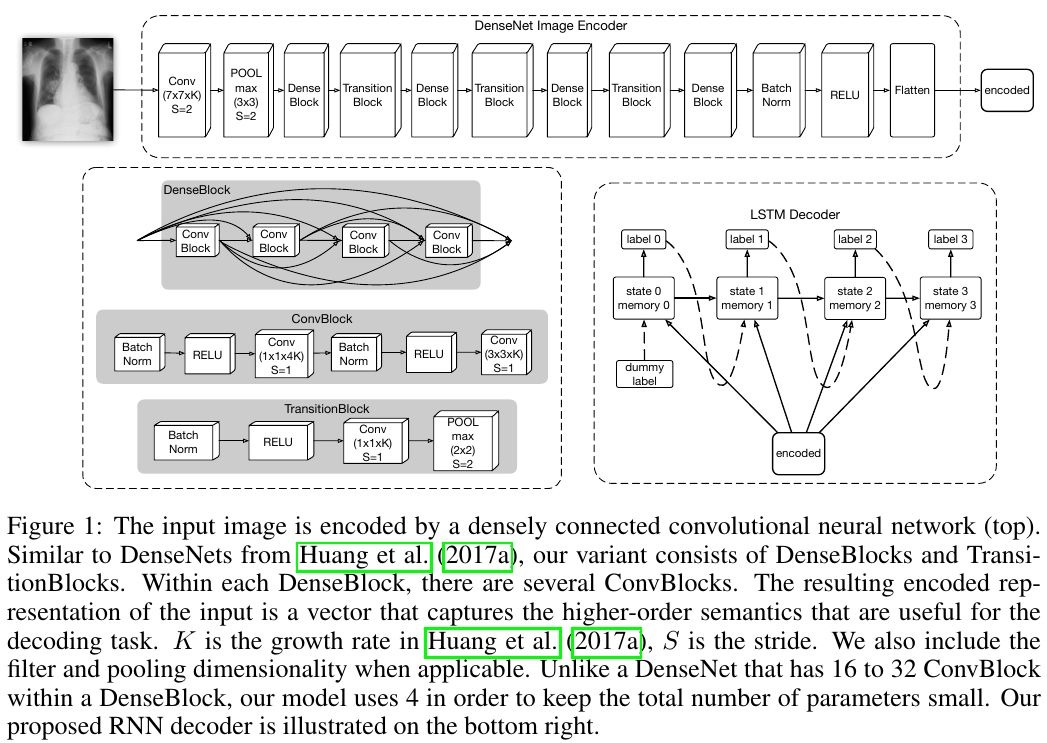

- DenseNet Image Encoder

- LSTM Decoder. using LSTM to leverage interdependencies among target lables

- without pre-training

1.2. Dependency

- complex interactions between abnormal patterns frequently have significant clinical meaning, providing radiologists with additional context

- e.g., a patient with cardiomegaly is more likely to also have pulmonary edema

- edema further predicts the possible presence of both consolidation and pleural effusion
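A dependency such as "cardiomegaly makes edema more likely" is a statement about conditional probabilities. The sketch below estimates P(edema) and P(edema | cardiomegaly) from a multi-hot label matrix; the matrix `Y` and the helper names `marginal` and `conditional` are hypothetical, and the numbers are made up purely to show the computation, not taken from ChestX-ray14.

```python
import numpy as np

# Hypothetical toy multi-hot label matrix: rows = images, columns = findings.
# Values are invented for illustration only.
LABELS = ["cardiomegaly", "edema", "consolidation", "effusion"]
Y = np.array([
    [1, 1, 0, 1],
    [1, 1, 1, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 1, 1, 1],
    [0, 0, 0, 0],
    [0, 0, 1, 0],
    [0, 0, 0, 0],
])


def marginal(y, a):
    """P(label a = 1), estimated as the fraction of images where a is positive."""
    return y[:, a].mean()


def conditional(y, a, b):
    """P(label a = 1 | label b = 1), estimated from co-occurrence counts."""
    given = y[:, b] == 1
    return y[given, a].mean()


card, edema = LABELS.index("cardiomegaly"), LABELS.index("edema")
print(f"P(edema)                = {marginal(Y, edema):.3f}")           # 0.375
print(f"P(edema | cardiomegaly) = {conditional(Y, edema, card):.3f}")  # 0.667
```

When the conditional probability is clearly larger than the marginal, knowing one label is informative about another, which is what a sequential label decoder can exploit.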

1.3. Related Work

1.3.1. Model Inter-label Dependencies

- loss functions that implicitly represent dependencies

- models that receive a subset of the previous predictions as input

- RNNs

1.3.2. OpenI dataset

- with 7,000 images, smaller and less representative than ChestX-ray8

2. Algorithm

2.1. Model

2.1.1. Encoder

2.1.2. Decoder

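- the initial LSTM state is computed from the encoder output x_enc

- f_h0 and f_c0, each with one hidden layer, produce the initial hidden state h_0 and cell state c_0

- LSTM

- y: ground-truth labels, decoded in a fixed ordering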
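The decoder above can be sketched in NumPy as follows. This is a minimal sketch under stated assumptions, not the paper's implementation: x_enc is assumed to be a pooled feature vector, the layer sizes are arbitrary, each LSTM step is assumed to receive the previous label together with x_enc, and the class names `MLP1`, `LSTMCell`, and `LabelDecoder` are hypothetical. Weights are random, so the outputs carry no meaning until trained.

```python
import numpy as np

rng = np.random.default_rng(0)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class MLP1:
    """One-hidden-layer network, standing in for f_h0 and f_c0 (sizes are assumptions)."""
    def __init__(self, d_in, d_hidden, d_out):
        self.W1 = rng.normal(0, 0.1, (d_hidden, d_in))
        self.b1 = np.zeros(d_hidden)
        self.W2 = rng.normal(0, 0.1, (d_out, d_hidden))
        self.b2 = np.zeros(d_out)

    def __call__(self, x):
        return self.W2 @ np.tanh(self.W1 @ x + self.b1) + self.b2


class LSTMCell:
    """Standard LSTM cell; gate pre-activations stacked as [input, forget, cell, output]."""
    def __init__(self, d_in, d_h):
        self.W = rng.normal(0, 0.1, (4 * d_h, d_in + d_h))
        self.b = np.zeros(4 * d_h)

    def __call__(self, x, h, c):
        z = self.W @ np.concatenate([x, h]) + self.b
        i, f, g, o = np.split(z, 4)
        c_new = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
        h_new = sigmoid(o) * np.tanh(c_new)
        return h_new, c_new


class LabelDecoder:
    """Predicts labels one at a time in a fixed order, conditioned on earlier labels."""
    def __init__(self, d_enc, d_h, n_labels):
        self.f_h0 = MLP1(d_enc, d_h, d_h)
        self.f_c0 = MLP1(d_enc, d_h, d_h)
        # Assumed step input: previous label (1 value) concatenated with x_enc.
        self.cell = LSTMCell(1 + d_enc, d_h)
        self.w_out = rng.normal(0, 0.1, d_h)
        self.b_out = 0.0
        self.n_labels = n_labels

    def __call__(self, x_enc, y_true=None):
        """Return p(y_t = 1 | y_<t, x) for t = 1..n_labels.

        With y_true given, ground-truth previous labels are fed in (teacher forcing);
        otherwise the model's own thresholded predictions are fed back.
        """
        h, c = self.f_h0(x_enc), self.f_c0(x_enc)
        prev = 0.0  # start value before the first label
        probs = np.empty(self.n_labels)
        for t in range(self.n_labels):
            h, c = self.cell(np.concatenate([[prev], x_enc]), h, c)
            probs[t] = sigmoid(self.w_out @ h + self.b_out)
            prev = float(y_true[t]) if y_true is not None else float(probs[t] > 0.5)
        return probs


x_enc = rng.normal(size=32)  # stand-in for a pooled DenseNet feature vector
dec = LabelDecoder(d_enc=32, d_h=16, n_labels=14)
p = dec(x_enc)
print(p.shape)  # (14,)
```

Initializing h_0 and c_0 from x_enc lets the image condition every step, while feeding the previous label back in is what lets later predictions depend on earlier ones.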

2.2. Loss Function
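One way to write the objective, assuming the LSTM decoder factorizes the joint label distribution with the chain rule along the fixed label ordering and is trained with ground-truth previous labels (teacher forcing). Here N is the number of labels, and w_t^{+}, w_t^{-} stand for the class weights of the weighted cross-entropy listed in the experiment details; their exact values are not given here, and inverse-frequency weighting is only one common choice.

```latex
p(\mathbf{y} \mid x) = \prod_{t=1}^{N} p\left(y_t \mid y_{<t}, x\right),
\qquad
\hat{p}_t = p\left(y_t = 1 \mid y_{<t}, x\right)

\mathcal{L}(x, \mathbf{y}) = -\sum_{t=1}^{N}
  \Big[ w_t^{+}\, y_t \log \hat{p}_t + w_t^{-}\,(1 - y_t) \log\left(1 - \hat{p}_t\right) \Big]
```

With w_t^{+} = w_t^{-} = 1 the loss reduces to exactly -\log p(\mathbf{y} \mid x) under this factorization, i.e. the NLL metric reported later.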

3. Experiments

3.1. Details

- random split into training (70%), validation (10%), and test (20%) sets

- the ChestX-ray8 authors noticed insignificant performance differences across different random splits

- input resolution: 512×512

- random translation by 25 pixels in any of the 4 directions

- random rotation in [-15°, 15°]

- random scaling in [80%, 120%]

- early stopping based on the validation set

- weighted cross-entropy loss

- no pre-training
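The data-preparation details above (random 70/10/20 split and the three augmentations) can be sketched as below. Assumptions, all hypothetical: the split is done per image rather than per patient, one of the four directions is chosen at random with a shift magnitude drawn uniformly from [0, 25] pixels, all three transforms are applied together, and the function names `random_split`, `sample_augmentation`, and `affine_matrix` are illustrative.

```python
import numpy as np

IMAGE_SIZE = 512            # input resolution
MAX_SHIFT = 25.0            # pixels
ROT_RANGE = (-15.0, 15.0)   # degrees
SCALE_RANGE = (0.8, 1.2)    # 80% to 120%
# Unit vectors for the 4 translation directions: right, left, down, up.
DIRECTIONS = np.array([[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0], [0.0, -1.0]])


def random_split(n_images, fractions=(0.7, 0.1, 0.2), seed=0):
    """Randomly partition image indices into train/val/test index arrays."""
    assert abs(sum(fractions) - 1.0) < 1e-9
    perm = np.random.default_rng(seed).permutation(n_images)
    n_train = int(round(fractions[0] * n_images))
    n_val = int(round(fractions[1] * n_images))
    return perm[:n_train], perm[n_train:n_train + n_val], perm[n_train + n_val:]


def sample_augmentation(rng):
    """Draw (shift, angle, scale); the [0, 25] px shift magnitude is an assumption."""
    shift = DIRECTIONS[rng.integers(4)] * rng.uniform(0.0, MAX_SHIFT)
    angle = rng.uniform(*ROT_RANGE)
    scale = rng.uniform(*SCALE_RANGE)
    return shift, angle, scale


def affine_matrix(shift, angle_deg, scale, size=IMAGE_SIZE):
    """2x3 affine matrix: rotate and scale about the image centre, then translate."""
    theta = np.deg2rad(angle_deg)
    A = scale * np.array([[np.cos(theta), -np.sin(theta)],
                          [np.sin(theta),  np.cos(theta)]])
    centre = np.array([size / 2.0, size / 2.0])
    t = centre - A @ centre + shift  # maps the centre to centre + shift
    return np.hstack([A, t[:, None]])


train_idx, val_idx, test_idx = random_split(1000)
print(len(train_idx), len(val_idx), len(test_idx))  # 700 100 200

rng = np.random.default_rng(0)
M = affine_matrix(*sample_augmentation(rng))
print(M.shape)  # (2, 3)
```

The resulting 2x3 matrix has the layout expected by affine warping routines such as OpenCV's `cv2.warpAffine`, which would then resample the 512×512 image.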

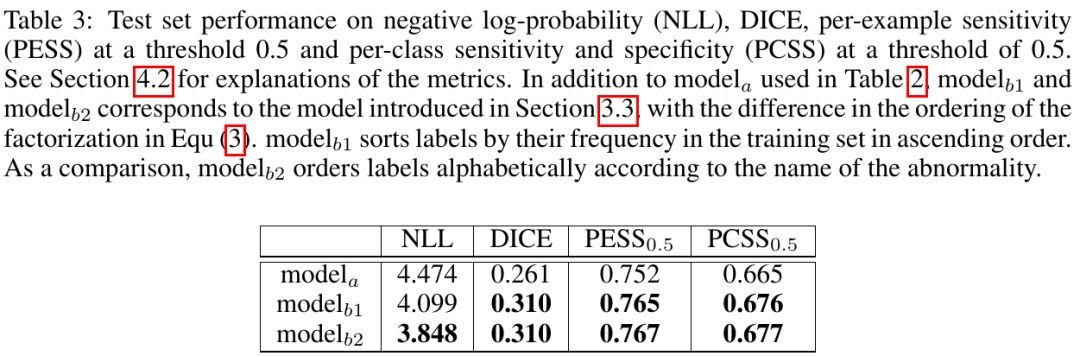

3.2. Metric

- NLL (negative log-likelihood)

- AUC (area under the ROC curve)

- DICE coefficient

- Per-example sensitivity and specificity (PESS)

- Per-class sensitivity and specificity (PCSS)
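The thresholded metrics above can be sketched on binary multi-hot arrays of shape (images, labels). This sketch assumes the following definitions, which may differ in detail from the paper's: PESS computes sensitivity and specificity over each image's labels and averages over images, PCSS computes them over each class across images and averages over classes, undefined ratios (0/0) are skipped, and DICE is 2|A∩B|/(|A|+|B|) per image, taken as 1 when both sets are empty. The helper names are hypothetical.

```python
import numpy as np


def _sens_spec(y_true, y_pred, axis):
    """Sensitivity and specificity along `axis`; 0/0 becomes NaN."""
    tp = np.sum((y_true == 1) & (y_pred == 1), axis=axis)
    fn = np.sum((y_true == 1) & (y_pred == 0), axis=axis)
    tn = np.sum((y_true == 0) & (y_pred == 0), axis=axis)
    fp = np.sum((y_true == 0) & (y_pred == 1), axis=axis)
    with np.errstate(invalid="ignore", divide="ignore"):
        return tp / (tp + fn), tn / (tn + fp)


def pess(y_true, y_pred):
    """Per-example: compute over each image's labels, then average over images."""
    sens, spec = _sens_spec(y_true, y_pred, axis=1)
    return np.nanmean(sens), np.nanmean(spec)


def pcss(y_true, y_pred):
    """Per-class: compute over each class across images, then average over classes."""
    sens, spec = _sens_spec(y_true, y_pred, axis=0)
    return np.nanmean(sens), np.nanmean(spec)


def dice(y_true, y_pred):
    """Mean per-image DICE between true and predicted label sets."""
    inter = np.sum(y_true * y_pred, axis=1)
    total = np.sum(y_true, axis=1) + np.sum(y_pred, axis=1)
    return np.where(total == 0, 1.0, 2 * inter / np.maximum(total, 1)).mean()


y_true = np.array([[1, 0, 1], [0, 0, 0], [0, 1, 0]])
y_pred = np.array([[1, 0, 0], [0, 1, 0], [0, 1, 0]])
print(pess(y_true, y_pred))  # sensitivity 0.75, specificity 8/9
print(pcss(y_true, y_pred))  # sensitivity 2/3, specificity 5/6
print(dice(y_true, y_pred))  # 5/9
```

NLL and AUC are computed from the predicted probabilities rather than thresholded labels; scikit-learn's `roc_auc_score`, for example, computes per-class AUC from scores.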

3.3. Comparison